The need for data workflow automation

The surge of interest in AI is making businesses focus on their data. Small companies often use third parties to manage it, such as Google Analytics, HubSpot CRM, or Stripe payment processing. They might use a no-code tool such as Zapier to connect applications. These are fine for standard data and tasks that are not time-sensitive.

Larger businesses may need more control over the data they use and how it is stored. They want flexibility over derived analytics and subsequent actions. Enterprise Resource Planning software allows this, but is not fully customisable. For that, businesses need purpose-built integrations, which firms such as my partners at MSBC Group provide, often for less than the cost of fully loaded, off-the-shelf alternatives.

If you are working in real time, as in capital markets trading, with sensitive data such as medical records, or with large volumes of inputs, as in cybersecurity, then data pipeline management is on a whole new level.

Common challenges in data pipeline management

I am a proponent of getting as far away as possible from the 80% of tasks that are routine. This may be through automation, delegation, or simply stopping doing things that don't matter. Clearing my unread email too often is a time-wasting indulgence I need to keep in check.

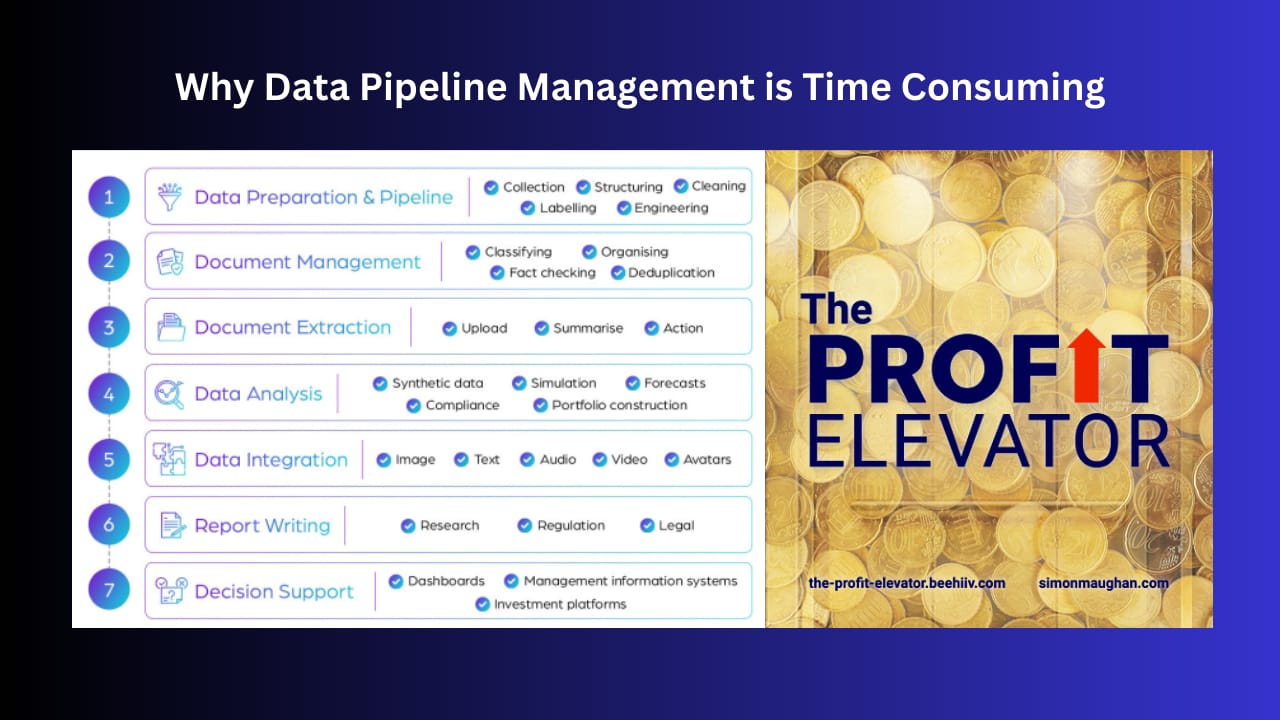

In 2017, IBM reported that 80% of the time in data science was spent on data cleaning and preparation. A 2023 study by Forrester Research pegged preparation time at 70%, leaving 30% for analysis. Why, when there are so many SaaS products promising to manage our data, does it take so long to prepare it?

Imagine you are collecting data for a new project. There are internal and external sources. Often these refer to the same type of data using different labels, contain insider terminology, or use similar terms to describe different functions. Your first task is to standardise the name used for each data set and function.
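As a minimal sketch of that first task, assuming the labels each source uses have already been collected by hand into an alias table, the Python below maps source-specific field names onto one canonical name. The names CANONICAL_ALIASES, build_lookup and standardise_record, and all the example labels, are hypothetical. Unknown labels raise an error instead of passing through silently, so new insider terminology gets reviewed by someone who knows what it means.

```python
from typing import Dict, List

# Hypothetical alias table: each canonical name lists the labels that
# different sources use for the same field. Built with domain experts.
CANONICAL_ALIASES: Dict[str, List[str]] = {
    "customer_id": ["cust_id", "CustomerID", "client_ref"],
    "revenue_gbp": ["rev", "Revenue (GBP)", "sales_gbp"],
    "trade_date": ["TradeDt", "trade_dt"],
}


def build_lookup(aliases: Dict[str, List[str]]) -> Dict[str, str]:
    """Invert the alias table into a case-insensitive label -> canonical map."""
    lookup: Dict[str, str] = {}
    for canonical, labels in aliases.items():
        for label in [canonical, *labels]:
            key = label.strip().lower()
            # The same label meaning two different things must be resolved by a person.
            if key in lookup and lookup[key] != canonical:
                raise ValueError(
                    f"Label {label!r} maps to both {lookup[key]!r} and {canonical!r}"
                )
            lookup[key] = canonical
    return lookup


def standardise_record(record: Dict[str, object], lookup: Dict[str, str]) -> Dict[str, object]:
    """Rename a record's fields to canonical names, refusing unknown or clashing labels."""
    out: Dict[str, object] = {}
    for label, value in record.items():
        key = label.strip().lower()
        if key not in lookup:
            raise KeyError(f"Unmapped label {label!r}: add it to the alias table")
        canonical = lookup[key]
        if canonical in out:
            raise ValueError(f"Two fields in one record map to {canonical!r}")
        out[canonical] = value
    return out


LOOKUP = build_lookup(CANONICAL_ALIASES)
print(standardise_record({"CustomerID": 42, "Revenue (GBP)": 1250.0}, LOOKUP))
# {'customer_id': 42, 'revenue_gbp': 1250.0}
```

Keeping the mapping in data rather than code means adding a new source is a matter of extending the table, which non-developers can review.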

When you are collecting time series data, reporting periods may vary, for example one source reporting every minute while another reports every second. Definitions change, often to meet regulations, and sources may be interrupted. Date formats in the US differ from those in much of the rest of the world: 03/04/2024 is 4 March in the US but 3 April in the UK. Sorting this out is frustrating, time-consuming work, but essential to the success of projects.
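A minimal Python sketch of two of these fixes, assuming each source's date format is known and recorded up front: parse timestamps with an explicit per-source format instead of guessing, then aggregate per-second readings into per-minute buckets so they line up with a per-minute source. SOURCE_DATE_FORMATS, the source names, parse_timestamp and to_minute_bars are hypothetical. Averaging within each minute is an illustrative choice; for prices you might take the last value instead.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

# Hypothetical per-source formats. The same string means different dates
# depending on the source, so the format is recorded rather than inferred.
SOURCE_DATE_FORMATS: Dict[str, str] = {
    "us_vendor": "%m/%d/%Y %H:%M:%S",
    "uk_vendor": "%d/%m/%Y %H:%M:%S",
}


def parse_timestamp(raw: str, source: str) -> datetime:
    """Parse a timestamp string with the format registered for its source."""
    return datetime.strptime(raw, SOURCE_DATE_FORMATS[source])


def to_minute_bars(points: Iterable[Tuple[datetime, float]]) -> List[Tuple[datetime, float]]:
    """Average per-second readings into per-minute values, sorted by minute."""
    buckets: Dict[datetime, List[float]] = defaultdict(list)
    for ts, value in points:
        # Truncate to the start of the minute to find the bucket.
        buckets[ts.replace(second=0, microsecond=0)].append(value)
    return [(minute, sum(vals) / len(vals)) for minute, vals in sorted(buckets.items())]


us = parse_timestamp("03/04/2024 09:30:15", "us_vendor")
uk = parse_timestamp("03/04/2024 09:30:15", "uk_vendor")
print(us.date(), uk.date())  # 2024-03-04 2024-04-03
```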

Companies with longstanding products run into these problems, as do businesses built by acquisition. Applications may be coded in different languages, while the original developers and product managers are long gone. Throw in the complication that much of the most recent data is audio and video, and data management may seem like an unconquerable mountain.

Best practices for managing data pipelines

The solution to any complicated task is to break it down into steps. Start with a simple use case that can be demonstrated from a small data sample. This is how we build AI proofs of concept. Firms do not grant access to sensitive internal systems, but they will share downloaded samples of anonymised data, which are enough to prove a process.

From there, additional data sources are added to round out workflows, and provision is made for edge cases. New processes are added. The data team infills missing data, standardises its presentation and assigns common labels. Modular programming enables functions to be built in blocks, which fit together in solutions of different sizes.
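To illustrate the modular approach, here is a minimal Python sketch, assuming records arrive as a time-ordered list of dictionaries. Each cleaning step is a small, independent block, and pipeline chains whichever blocks a given workflow needs. The helpers rename, forward_fill and pipeline are hypothetical, and forward-filling is only one possible infill rule: it copies the last known value into at most max_gap consecutive missing slots and leaves longer gaps empty so they can be reported rather than papered over.

```python
from typing import Callable, Dict, List, Optional

Record = Dict[str, Optional[float]]
Step = Callable[[List[Record]], List[Record]]


def rename(mapping: Dict[str, str]) -> Step:
    """Block that renames fields to the common labels agreed by the data team."""
    def step(rows: List[Record]) -> List[Record]:
        return [{mapping.get(k, k): v for k, v in row.items()} for row in rows]
    return step


def forward_fill(field: str, max_gap: int) -> Step:
    """Block that fills up to max_gap consecutive missing values with the last known value."""
    def step(rows: List[Record]) -> List[Record]:
        last: Optional[float] = None
        gap = 0
        out: List[Record] = []
        for row in rows:
            row = dict(row)  # copy so the caller's data is not mutated
            if row.get(field) is None:
                gap += 1
                if last is not None and gap <= max_gap:
                    row[field] = last
            else:
                last = row[field]
                gap = 0
            out.append(row)
        return out
    return step


def pipeline(*steps: Step) -> Step:
    """Chain blocks in order; the same blocks can be reused in other pipelines."""
    def run(rows: List[Record]) -> List[Record]:
        for s in steps:
            rows = s(rows)
        return rows
    return run


clean = pipeline(rename({"px": "price"}), forward_fill("price", max_gap=1))
rows = [{"px": 100.0}, {"px": None}, {"px": None}, {"px": 101.0}]
print(clean(rows))
# [{'price': 100.0}, {'price': 100.0}, {'price': None}, {'price': 101.0}]
```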

Once processes have been finalised, data management becomes a series of checklists. Did the source update? Is the new data accurate? Are there gaps, and who reports them to data providers? Who takes care of data pipeline governance and security? These are typical 80% tasks to be outsourced. MSBC has specialised in handling these jobs since its days looking after OTAS Technologies, the startup where I was COO.
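The first three checklist questions can be automated. The Python below is a minimal sketch, assuming each source delivers a list of (timestamp, value) pairs, a known reporting interval and a plausible value range. run_checklist, CheckReport and all thresholds are hypothetical. Note that a range check only tests plausibility, not accuracy; confirming accuracy still needs a comparison against a trusted reference. The resulting list of issues is what someone would then send on to the data provider.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


@dataclass
class CheckReport:
    source: str
    issues: List[str] = field(default_factory=list)

    @property
    def ok(self) -> bool:
        return not self.issues


def run_checklist(
    source: str,
    series: List[Tuple[datetime, Optional[float]]],
    now: datetime,
    max_age: timedelta,
    expected_step: timedelta,
    valid_range: Tuple[float, float],
) -> CheckReport:
    """Check one source for staleness, implausible values and gaps."""
    report = CheckReport(source)
    if not series:
        report.issues.append("no data received")
        return report
    timestamps = [ts for ts, _ in series]

    # 1. Did the source update recently enough?
    age = now - max(timestamps)
    if age > max_age:
        report.issues.append(f"stale: last update {age} ago")

    # 2. Is the new data plausible? (missing or out-of-range values)
    lo, hi = valid_range
    for ts, value in series:
        if value is None or not (lo <= value <= hi):
            report.issues.append(f"out of range at {ts.isoformat()}: {value}")

    # 3. Are there gaps longer than the expected reporting interval?
    ordered = sorted(timestamps)
    for prev, cur in zip(ordered, ordered[1:]):
        if cur - prev > expected_step:
            report.issues.append(f"gap between {prev.isoformat()} and {cur.isoformat()}")
    return report


series = [
    (datetime(2024, 3, 4, 9, 0), 100.0),
    (datetime(2024, 3, 4, 9, 1), 101.0),
    (datetime(2024, 3, 4, 9, 5), -5.0),
]
report = run_checklist(
    "vendor_a", series, now=datetime(2024, 3, 4, 10, 0),
    max_age=timedelta(minutes=15), expected_step=timedelta(minutes=1),
    valid_range=(0.0, 1000.0),
)
for issue in report.issues:
    print(issue)
```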

The risks inherent in data management

The danger of relying on data from SaaS products is that it may not tell you anything of interest. The risk of building workflows around API-based data ingestion is that this may not be the work you actually need done. The greatest threat is that the data is inaccurate or missing, and you do not know what your provider does to bridge the gaps.

Questions to ask and answer

What data-driven decisions do I make?

Is the data pipeline process tried and tested?

Should I outsource the pipeline to save time and money?

Here are three ways I can help

Book a consultation to talk about all things AI.

Read our deep dive into data science.

Explore our use cases using accelerated computing.